Robots in 2024 are far more complex than their single-armed factory-working predecessors. Modern robots can run, jump, do the splits, and even hold down a basic conversation. At the same time, despite decades of technical advancements and billions of dollars of investment, even the most advanced robot systems still struggle to do many everyday tasks humans take for granted, like folding laundry or stacking blocks. Ironically, robots are quite bad at doing things we find easy. That’s the case for now, at least. New advances in robot training that take some inspiration from massively popular large language models like ChatGPT may change that… eventually.

Robots are everywhere, but their abilities are limited

Robots are increasingly visible in everyday life. Factories and manufacturing facilities have used simple single-task robotic arms for decades to quickly ramp up production. In logistics, major brands like Amazon and Walmart are already having slightly more advanced robots work alongside humans to move heavy objects around and sort through packages. DHL uses Boston Dynamics’ “Stretch” robots to reach for boxes and move them onto conveyor systems. Some fast-casual restaurants like Denny’s have even experimented with multi-shelf delivery bots that shuffle plates of food to tables. Chipotle has its own AI-guided avocado-pitting prototype. Amazon alone reportedly already has over 750,000 robots in its operations, and that number is growing.

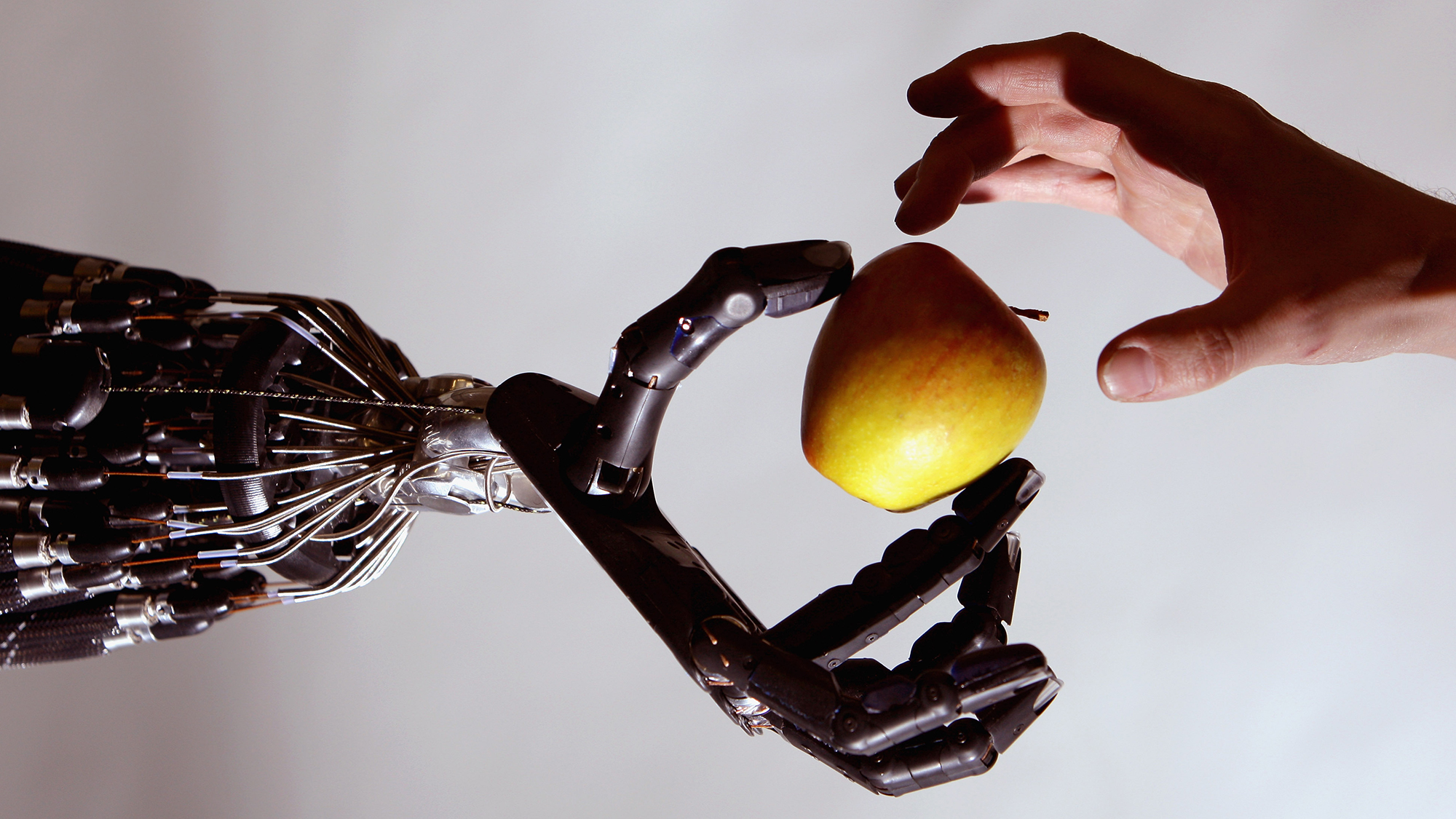

All of these systems are impressive in their own particular ways, but none of them can compete with a human when it comes to many mundane tasks. An advanced computer with the right software could easily school even the most adept chess player, but it would take a feat of engineering to have a robot pick out a single chess piece from a cluttered pile. A coffee-making robot could likely pour out brews at a faster clip than a human barista, but it would struggle if asked to find an old, tepid cup somewhere in a room and zap it back to life in the microwave.

‘Robots can go all the way to Mars, but they can’t pick up the groceries’

In general, robots are good at many things humans struggle with and bad at many things humans find easy. This general observation, known by experts in the robotics world as “Moravec’s Paradox,” dates all the way to a 1988 book by Carnegie Mellon professor Hans Moravec. It has remained frustratingly true nearly forty years on. So what exactly is going on here? Roboticist and University of California, Berkeley professor Ken Goldberg tried to break down what accounts for these “clumsy robots” during a TED Talk last year.

Goldberg said the three primary challenges for robots are perception, control, and physics. On the perception side, robots rely on cameras and other sensors like lidar to “see” the world around them. Though those tools are constantly improving, they still aren’t as reliable as human eyes. That’s why so-called self-driving cars have been known to make mistakes if exposed to bright flashing lights, or, as was the case in San Francisco last year, other cars with orange traffic cones on their hoods. Modern warehouse sorting robots like Amazon’s Sparrow, meanwhile, are quite adept when limited to their narrow parameters, according to a recent New York Times story, but reportedly struggle with more “targeted picking.”

“An Amazon order could be anything from a pillow, to a book, to a hat, to a bicycle,” University of Cambridge robotics professor Fumiya Iida said in a statement. “For a human, it’s generally easy to pick up an item without dropping or crushing it—we instinctively know how much force to use. But this is really difficult for a robot.”

That leads to the second problem Goldberg points out: control. Though humans and many animals like dogs have benefited from millions of years of evolution to sync up our vision with our limbs, robots don’t have that same luxury. Cameras and sensors in one part of a robot can often get out of sync with the mechanical arms or grippers tasked with manipulating objects. This mismatch can lead robots to suddenly drop what they are holding. That’s part of the reason why the robot slinging sizzling plates of eggs and bacon to Denny’s customers only brings the plate to the table. A human waiter still has to grab the plates and hand them to the diner.

That hasn’t stopped some from trying to imagine those capabilities into existence. During a widely watched press event at Warner Brothers Studios earlier this year, Tesla’s much-hyped “Optimus” humanoid robots sauntered throughout the event space checking IDs, whipping up cocktails, and striking up conversation with guests. In reality, those “autonomous” machines were about as real as the fake Hollywood set pieces surrounding them. Reporting following the event revealed the robots were actually teleoperated by nearby Tesla workers. But while that hyperbolic performance art is par for the course for Musk projects, it also points to a larger issue facing robotics. The types of tasks Optimus failed to perform on its own at the event, such as maneuvering objects and pouring out simple mixed drinks, are notoriously difficult for robots generally.

Optimus make me a drink, please.

This is not wholly AI. A human is remote assisting.

Which means AI day next year where we will see how fast Optimus is learning. pic.twitter.com/CE2bEA2uQD

— Robert Scoble (@Scobleizer) October 11, 2024

The final issue, physics, is one that neither humans nor robots can really control. In his lecture, Goldberg gives the example of a robot pushing a bottle across a table. The robot used the same force and pushed the bottle in the same way every time, but the bottle always ended up in a slightly different position. That variation depends in part on the microscopic surface topography of the table as the bottle slides across it. Humans deal with these slight variations many times every day, but we instinctively understand how to correct for them through experience.

For the most part, robots also start to struggle as soon as they are tasked with doing anything outside of the narrow set of test environments they were crafted for. Though a human would probably be able to figure out how to escape from a random room, even a highly mobile robot would get confused and waste time looking for doors in nonsensical places like the floors and ceiling. Ironically, it’s slight nuances like these that are proving more difficult for robots to account for than seemingly much more impressive feats like lifting heavy objects or even space travel.

“Robots can go all the way to Mars, but they can’t pick up the groceries,” Iida added.

Teaching robots to learn from each other

That’s the general dilemma up until now, but researchers currently working on so-called “general-purpose robot brains” are hoping they can take some of the lessons learned from recent large language models and use them to make much more adaptable robots. The field of robotics has stalled compared to software and AI in recent years mainly due to a disparity in training data. LLMs like OpenAI’s GPT were able to make such large leaps because they were trained on enormous volumes of articles, books, videos, and images scraped from the internet. Whether or not that was legal remains contested in court.

Legal questions aside, there’s no real equivalent to the internet when it comes to robot-training data. Since robots are physical objects, collecting data on how they perform tasks often takes time and is confined to laboratories or other limited spaces. Robots are also mostly task-specific, so data from a cargo-loading machine might not really help improve a robot picking out objects from a bin.

But several groups are now trying to see if it’s possible to essentially lump data collected from many different types of robots into one unified deep neural network that can then be used to train new, general-purpose robots. One of those efforts, called the RT-X project, is being pursued by researchers at Google, UC Berkeley, and 32 other labs across North America, Europe, and Asia. Those researchers have already created what they are calling the world’s “largest open-source dataset of real robotic actions in existence.”

The dataset includes real-world experiences from robots completing around 500 different types of tasks. Researchers can then take a robot and use deep learning to train it on that dataset in a simulated environment. Goldberg described processes like this as similar to a robot “dreaming.” In the RT-X case, robots are able to identify training data relevant to their particular goal, such as improvements on mechanical arms, and use that to improve themselves. Writing in IEEE Spectrum, Google researcher Sergey Levine and DeepMind scientist Karol Hausman compared this to a human learning to both ride a bicycle and drive a car using the same brain.

“The model trained on the RT-X dataset can simply recognize what kind of robot it’s controlling from what it sees in the robot’s own camera observation,” the researchers wrote.

Roboticists are hopeful that these more generalized “brains” could scale up as more data is collected and possibly make new humanoid robots like those being produced by Figure and Tesla more capable of adapting to their environments. We’re already starting to get a glimpse of what that could look like. Last month, Boston Dynamics released a video showing its human-shaped Atlas robot locating, grabbing, and moving engine covers around a demo room.

Atlas was able to complete those tasks, Boston Dynamics claims, completely autonomously and without any “prescribed or teleoperated movements.” Crucially, the demo even shows Atlas at times making mistakes, but then quickly adjusting and correcting for them on the fly.

Sure, it might not be making Negronis or folding laundry yet, but it provides a glimpse of where the industry might be heading.